24 March, 2006

Academia and me – time for a separation?

Last week Alex at the Daily Transcript posted on what he thought were the worst and the best aspects of a scientific career, a particularly apt topic for me right now. It’s an important point that any situation, and by extension any career, will have its plusses and minuses, and all you can hope for is that the former outweigh the latter. I’m finding it hard to say that that is the case for me at the moment.

I did a PhD for two reasons. Firstly, and most importantly, I was interested. Secondly, I had no concrete ideas of how to sustain a career with a scientific flavour (which I knew I wanted) outside of academia; at least, not without compromising my ethics and working for the oil or mining industry (whether or not you agree with that stance, you can’t deny that acting against it would have been bad for me). And, despite the low pay, long hours and stress of the last four years, I don’t regret my choice. But if I stay in academia, the road ahead is by no means an easy one. Not that it ever is, of course, but I find myself in a position where I’m not even employed as a post-doc, but as a technician running the laboratory of my former supervisor. I’m also providing some academic cover (which is nice, but rankles slightly given that those responsibilities mean I should be paid a lot more than I actually am), but my principal duties are basically keeping the machines running so other people can do their research, rather than doing any of my own. For me, the fun, and the challenge, of science come from problem solving: finding a gap in current knowledge and working out how to fill it. Without that, the credit side of an academic life is fairly empty, which makes all the bad aspects seem worse. And, of course, not producing publishable research means that once the remaining papers from my PhD get through the system, there will be no more forthcoming, which is basically career suicide.

So why not find another position somewhere else? Here I run into a major shortcoming of my whole PhD experience: the lack of contacts I have made within my field. This was in many ways a consequence of doing a PhD focussing on New Zealand whilst based in the UK – you’re half a world away from the people who might want to talk to you about it – but (I realise now) it was also a failing of my supervisor (and, when he wasn’t proactive on my behalf, of me). If I stay in the game, all that may change eventually; this is the gist of the counter-argument employed by my supervisor/boss, who also habitually dangles tempting carrots, such as possible future field work abroad, in front of me. Of course, he has another agenda: it’s highly convenient for him to have me around, because I’m more qualified than the average technician, cheaper than a post-doc, and much cheaper than a lecturer to cover for him. My question is: is the promise of my position improving at some indefinable time in the future enough for me? More and more I find myself thinking that it is not. The lack of a clear path forward, combined with my nagging discontent, has brought me to a watershed moment: considering leaving academia altogether.

The magnitude of that decision scares me slightly. Would this be a permanent move? Would I have much choice? I don’t know. I also wonder whether I’m being a bit hard on my current position, which is, at least, a job. Any perspective you can offer, dear reader, would be appreciated.

Posted by Chris R at 1:33 pm

1 comment

Labels: academic life

A budget question for Chancellor Brown

Why is it that you can close inheritance tax loopholes retrospectively, but only apply the marginally increased road tax for the most fuel-inefficient cars to newly-bought ones?

(See here or here.)

Posted by Chris R at 9:41 am

0 comments

Labels: environment

18 March, 2006

Titan seeded by life from Earth?

Sorry for yet another planetary geology post, but this is just cool. The BBC reports a presentation at the Lunar and Planetary Science Conference in Texas by Brett Gladman, from the University of British Columbia. He was interested in the ability of large meteorite impacts, such as the Chicxulub impact which may have killed off the dinosaurs, to eject rocks bearing microbial life into interplanetary space.

[Dr Gladman's team] calculated that about 600 million fragments from such an impact would escape from Earth into an orbit around the Sun. Some of these would have escape velocities such that they could get to Jupiter and Saturn in roughly a million years...

...[They] calculated that up to 20 terrestrial rocks from a large impact on Earth would reach Titan. These would strike Titan's upper atmosphere at 10-15 km/s. At this velocity, the cruise down to the surface might be comfortable enough for microbes to survive the journey.

But the news was more bleak for Europa. By contrast with the handful that hit Titan, about 100 terrestrial meteoroids hit the icy moon.

But Jupiter's gravity boosts their speed such that they strike Europa's surface at an average 25 km/s, with some hitting at 40 km/s. Dr Gladman said other scientists had investigated the survival of amino acids hitting a planetary surface at this speed and they were "not good".
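To put rough numbers on why the Europa impacts are so much worse, here is a minimal Python sketch comparing the kinetic energy carried by each kilogram of rock (½v²) at the speeds quoted above. It assumes the simple point-mass formula and ignores the braking effect of an atmosphere, which Titan has and Europa lacks, so treat it as an illustration rather than a survival model.

```python
# Specific kinetic energy (J per kg of rock) at the impact speeds quoted above.
# Assumption: simple 0.5 * v**2 per unit mass; no atmospheric deceleration.

def specific_kinetic_energy(v_km_s: float) -> float:
    """Kinetic energy per kilogram (J/kg) for a rock moving at v_km_s km/s."""
    v = v_km_s * 1000.0  # convert km/s to m/s
    return 0.5 * v ** 2


speeds = {
    "Titan (low)": 10,
    "Titan (high)": 15,
    "Europa (average)": 25,
    "Europa (fast)": 40,
}

baseline = specific_kinetic_energy(10)
for label, v in speeds.items():
    e = specific_kinetic_energy(v)
    # Report the energy and how it compares with the slowest Titan case.
    print(f"{label:17s} {v:2d} km/s  {e:.2e} J/kg  ({e / baseline:.2f}x the 10 km/s case)")
```

Because the energy scales with the square of the speed, a 40 km/s strike on Europa delivers sixteen times the energy per kilogram of a 10 km/s arrival at Titan.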

Of course, just because it's possible for terrestrial life to be transferred to Titan doesn't make it likely. And although Titan is rich in organic compounds, it's still an extremely cold (around -180 °C) and harsh environment for any bacteria that did make it. An intriguing possibility, though...

Posted by Chris R at 10:52 am

0 comments

Labels: geology, planetary geology

15 March, 2006

A brief history of Titan

Amidst all the excitement as Cassini-Huygens successfully penetrates the smoggy atmosphere of Titan, there is a faint twinge of disappointment – and a mystery. Titan’s atmosphere consists of around 5% methane, which is not stable over geological timescales because it is constantly broken down by sunlight. Before Cassini, scientists proposed that methane in the atmosphere was constantly being topped up by evaporation from extensive lakes and seas of hydrocarbons. Sadly, Cassini revealed that the surface is presently free of such features, although flowing liquid hydrocarbons have almost certainly played a role in shaping the landscape in the past. So where is the methane coming from?

The most likely explanation is that the methane is released from inside Titan by geological activity. Titan has a crust that consists mainly of water ice, and the low surface temperatures mean that some of this ice might be in the form of clathrates (also known as gas hydrates), which trap gases like methane. Heat from Titan’s interior, produced either by radioactivity in a silicate core or tidal heating induced by the gravitational pull of Saturn, could possibly destabilise these clathrates, releasing methane into the atmosphere.

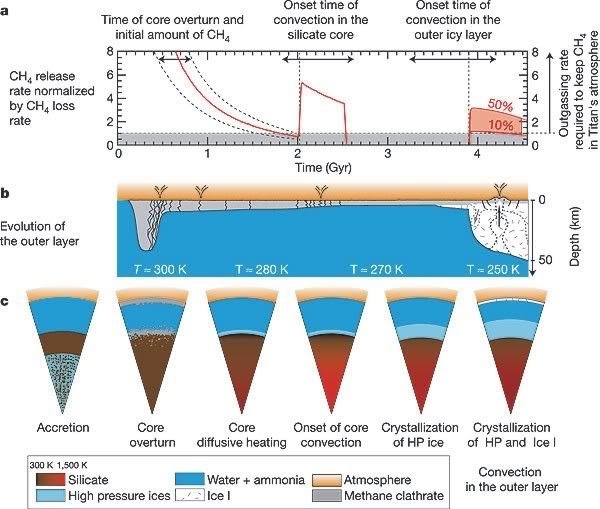

Although Cassini has spotted at least one example of what looks like a cryovolcano, we still know very little about the forces driving this activity on Titan, the rates at which it might be occurring, and, most importantly, whether it is sufficient to explain all of the surface features we are seeing. A paper by Tobie et al.[1] in the March 2 issue of Nature attempts to shed some light on these questions by presenting a new model of Titan’s interior and how it has evolved since it formed. This model is nicely summarised in their Figure 2: panel a plots the rate of methane outgassing over time predicted by the model, while panels b and c show the changing internal structure of the upper 50 km and of the whole moon, respectively.

The model proposes that beneath the icy crust is a ‘mantle’ of ammonia-rich water, similar to the one inferred beneath the surface of Europa. To prevent all of the methane from escaping by outgassing during accretion, the authors suggest that the initial proto-core consisted of a mixture of rock and ice, including clathrates, beneath a silicate shell. Because ice is less dense than silicate, this arrangement is unstable: convective overturn would eventually move the silicate to the inner core, and the ice and clathrates would migrate outwards. The low density of the clathrates would allow them to rise to the top of the water layer and accumulate at the base of the crust. The resulting thickening of the crust inhibited heat flow to the surface, causing the subsurface ocean to warm to the point where the clathrates started to dissociate and release methane into the atmosphere.

This was the first of three predicted episodes of atmospheric methane release over the last 4.5 billion years, lasting from ~4 to 2.5 billion years ago. The second episode occurred 2.5 to 2 billion years ago, when the silicate core was heated enough by radioactive decay to start convecting, increasing the heat flow to the surface and triggering further clathrate dissociation.

The methane we presently observe in the atmosphere is due to the third (and final) outgassing episode. As Titan has gradually cooled over the last 2 billion years, the subsurface ocean has begun to freeze, building up a thickening layer of ice beneath the clathrates and outer icy crust. In the last half-billion years this layer has become so thick that convection is now a more efficient method of heat transfer than conduction, leading to upwelling ‘hot’ (relatively!) thermal plumes which drive localised dissociation and venting of methane at cryovolcanoes.

This model explains many of the recent observations of Titan, but will stand or fall on the basis of two predictions. The first is the existence of a subsurface ocean; Cassini is conveniently about to start a series of flybys designed to probe the interior of Titan using the Radio Science Subsystem, so we should know the answer to that one fairly soon. The second concerns the age of surface features: the model suggests that the current episode of methane release is much more limited in scale than the first two, meaning that most of the large-scale erosional features being observed should date to the last major outgassing 2 billion years ago. If the model is correct, this was also the last time that hydrocarbon seas existed on the surface of Titan. Huygens, it seems, arrived a little too late to surf some oily waves.

[1] Tobie et al., Nature 440, 61-64 (2006).

Posted by Chris R at 10:45 pm

0 comments

Labels: geology, planetary geology

14 March, 2006

Latest from Stardust

Via the BBC: NASA has just released some preliminary results from the Stardust probe, which brought dust particles from Comet Wild 2 back to Earth in January. Intriguingly, it seems that a significant proportion of the material collected consists of minerals, such as olivine and other silicates, which only form at temperatures above 1000 °C.

Back when the sample capsule landed I explained how examining the composition of the cometary material could help to constrain our models of planetary formation. What these results may be telling us is that the solar nebula from which our solar system eventually formed was a much more dynamic place than we had supposed. From the NASA press release:

"We have found very high-temperature minerals, which supports a particular model where strong bipolar jets coming out of the early sun propelled material formed near to the sun outward to the outer reaches of the solar system," said Michael Zolensky, Stardust curator and co-investigator at NASA's Johnson Space Center, Houston. "It seems that comets are not composed entirely of volatile rich materials but rather are a mixture of materials formed at all temperature ranges, at places very near the early sun and at places very remote from it."

In the model referred to (known as the “X-wind model” – see here for some background), the “strong bipolar jets” are thought to be generated by the interaction of a rapidly spinning accretion disk with the magnetic field of the growing central protostar. This model was originally proposed to explain similar mixing of material which formed at different temperatures (and therefore locations) in asteroids, but the fact that we are seeing material from the very inner solar system being transported right out into the outer reaches where comets formed means that these jets must have been very powerful indeed.

As always, of course, there is an alternative: this material might predate the solar system. Having formed close to another, older star, it could have been blown into interstellar space by a supernova and then incorporated into the solar nebula that eventually became our solar system. Measuring some isotopic ratios for these grains should help to distinguish between these two possibilities.
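Isotopic comparisons like this are conventionally reported in "delta" notation: the deviation of a sample's isotope ratio from a reference standard, in parts per thousand (per mil). Below is a minimal Python sketch, assuming oxygen isotopes as the system of interest (the post does not say which ratios the Stardust team will measure) and using the VSMOW reference ratios. The anomaly threshold and the sample grains are hypothetical, chosen only to illustrate the idea that grains from another star can carry compositions far from anything formed in our own nebula.

```python
# Minimal sketch: oxygen-isotope delta values (per mil) relative to VSMOW,
# plus a crude, HYPOTHETICAL screen for strongly anomalous grains.

VSMOW_17O_16O = 379.9e-6    # 17O/16O ratio of the VSMOW standard
VSMOW_18O_16O = 2005.20e-6  # 18O/16O ratio of the VSMOW standard

# Hypothetical cut-off for illustration only; real studies compare against the
# measured spread of solar-system materials rather than a single fixed number.
ANOMALY_THRESHOLD_PER_MIL = 100.0


def delta_per_mil(sample_ratio: float, standard_ratio: float) -> float:
    """Deviation of an isotope ratio from a standard, in parts per thousand."""
    return (sample_ratio / standard_ratio - 1.0) * 1000.0


def is_strongly_anomalous(r17_16: float, r18_16: float) -> bool:
    """True if either oxygen delta value exceeds the hypothetical threshold."""
    d17 = delta_per_mil(r17_16, VSMOW_17O_16O)
    d18 = delta_per_mil(r18_16, VSMOW_18O_16O)
    return max(abs(d17), abs(d18)) > ANOMALY_THRESHOLD_PER_MIL


# Hypothetical grains (made-up ratios, not Stardust data):
print(is_strongly_anomalous(379.9e-6, 2005.20e-6))      # same as the standard
print(is_strongly_anomalous(2 * 379.9e-6, 2005.20e-6))  # 17O enriched twofold
```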

Posted by Chris R at 10:45 pm

0 comments

Labels: geology, planetary geology

11 March, 2006

'Teach the controversy', UK style

It's odd really. My introduction to the world of blogging came through my exploration of the whole ID/creationism issue (which got me reading the Panda's Thumb, which introduced me to Pharyngula and many other science blogs), but I didn't really envisage writing about it much on my own blog. Here in the UK the debate has always seemed to be much more low-level, not really involving the government and courts the way it has in the US. Now, however, the publicity around the Dover trial seems to have stirred something up, or at least started our media examining attitudes to ID and creationism in this country more closely than they have in the past. And the picture has not been especially encouraging, as I have written about here and here.

Now I read in The Times that the OCR examinations board is planning to reference creationism in its new GCSE biology syllabus (also reported by the BBC here). Do we need to start panicking?

If you download the new OCR syllabus (pdf) and examine the relevant section (Item B2f: Survival of the Fittest, on pages 33-35) you will find no mention of ID concepts, or even the "weaknesses in the theory of evolution" rubbish that people are trying to write into the syllabuses in the US. There are two "Assessable learning outcomes" which probably sparked the hysteria:

- Explain that the fossil record has been interpreted differently over time (e.g. creationist interpretation) (Page 35, top).

- Explain the reasons why the theory of evolution by natural selection met with an initially hostile response (social and historical context) (Page 35, bottom).

Well, the language there could have been a bit tighter, perhaps, but given that the treatment of evolutionary concepts appears sound on the whole, I think this is aimed at discussing creationism as a failed explanation from the 19th century, rather than a credible modern alternative to evolutionary theory (there is also a discussion of Lamarckian inheritance). In other words, I agree with this comment from Flitcraft in the inevitable discussion over at Pharyngula:

There is the possibility that a Creationist teacher might seize on [those sentences] as an excuse to do some mischief, but given the way the syllabus covers things like debunking Lamarck, I think this is an attempt to teach some history of science rather than a pop at putting a Creationist trojan horse into the exam syllabus.

The exam board have also posted this statement:

At OCR, we believe candidates need to understand the social and historical context to scientific ideas both pre and post Darwin.

In our Gateway Science specification, candidates are asked to discuss why the opponents of Darwinism thought the way they did and how scientific controversies can arise from different ways of interpreting empirical evidence (my emphasis).

Creationism and 'intelligent design' are not regarded by OCR as scientific theories. They are beliefs that do not lie within scientific understanding.

The last paragraph is strong enough to offset the fact that the statement I've bolded sounds a little bit weasel-like. Interestingly, this part is lifted almost verbatim from the National Curriculum itself, where, rather suspiciously, evolutionary theory is singled out as a 'scientific controversy'. The exam boards are not the people we should be keeping our eyes on, perhaps.

Posted by Chris R at 6:06 pm

1 comment

Labels: antiscience

08 March, 2006

Caffeinated Catch-22

The way the media reports on food health issues generally brings out the cynic in me, which notes that if you wait around long enough, the new ‘wonder food’ will be implicated as a possible carcinogen. Reporters often seem to miss the obvious fact that both could be true, depending on circumstances (for example, something that is very good for you in small doses can be very bad for you in large doses).

So I generally ignore the periodic announcements that coffee/caffeine is good/bad for me. I have a long-standing love affair (‘addiction’ is such an ugly word) with coffee – proper coffee, that is, not that instant or Starbucks rubbish. With apologies to my fellow Brits for the blasphemy, I much prefer it to tea. I drink 1-3 cups a day, and have yet to see convincing evidence for the insane mortality rate that would exist in academic circles if it were too bad for you, so am on the whole fairly happy.

Now I find out, in a widely reported study, that depending on which version of a particular gene I have, my habit is either increasing my risk of a heart attack by 36%, or reducing it by 22%. Here’s hoping I have two copies of the ‘fast’ enzyme gene.

Posted by Chris R at 2:21 pm

0 comments

Labels: daft science

07 March, 2006

Look! Important people agree with me!

The BBC reports that the Sustainable Development Commission (SDC), the Government’s own independent watchdog, has reported that, as I have been arguing, nuclear power is not the magic bullet for our energy woes.

From the SDC press release:

Based on eight new research papers (which you can read for yourself here), the SDC report gives a balanced examination of the pros and cons of nuclear power. Its research recognizes that nuclear is a low carbon technology, with an impressive safety record in the UK. Nuclear could generate large quantities of electricity, contribute to stabilising CO2 emissions and add to the diversity of the UK’s energy supply.

However, the research establishes that even if the UK’s existing nuclear capacity was doubled, it would only give an 8% cut on CO2 emissions by 2035 (and nothing before 2010). This must be set against the risks.

The report identifies five major disadvantages to nuclear power:

- Long-term waste – no long term solutions are yet available, let alone acceptable to the general public; it is impossible to guarantee safety over the long-term disposal of waste.

- Cost – the economics of nuclear new-build are highly uncertain. There is little, if any, justification for public subsidy, but if estimated costs escalate, there’s a clear risk that the taxpayer will have to pick up the tab.

- Inflexibility – nuclear would lock the UK into a centralised distribution system for the next 50 years, at exactly the time when opportunities for microgeneration and local distribution network are stronger than ever.

- Undermining energy efficiency – a new nuclear programme would give out the wrong signal to consumers and businesses, implying that a major technological fix is all that’s required, weakening the urgent action needed on energy efficiency.

- International security – if the UK brings forward a new nuclear power programme, we cannot deny other countries the same technology (under the terms of the Framework Convention on Climate Change). With lower safety standards, they run higher risks of accidents, radiation exposure, proliferation and terrorist attacks.

On balance, the SDC finds that these problems outweigh the advantages of nuclear. However, the SDC does not rule out further research into new nuclear technologies and pursuing answers to the waste problem, as future technological developments may justify a re-examination of the issue.

The chairman of the SDC, Jonathon Porritt, had some strong words to accompany the report:

“Instead of hurtling along to a pre-judged conclusion (which many fear the Government is intent on doing), we must look to the evidence. There’s little point in denying that nuclear power has benefits, but in our view, these are outweighed by serious disadvantages. The Government is going to have to stop looking for an easy fix to our climate change and energy crises – there simply isn’t one.”

Of course, this is only one of many commissions and think-tanks likely to weigh into this debate in the coming months – and it’s not exactly a huge shock that a body concerned with sustainable development views nuclear energy with a somewhat sceptical eye. But it’s cheering to find my concerns being advocated by people the Government can less easily ignore. Which isn’t to say that they won’t, of course…

Posted by Chris R at 2:24 pm

0 comments

Labels: environment, nuclear

04 March, 2006

Misusing “measurement”

Stoat (recently assimilated by the ScienceBlogs collective) has an excellent post up about the new estimate of ice accumulation in the Antarctic, which is based on a very clever idea: using the GRACE satellites to measure the changing gravity field (and hence mass) over the Antarctic region. The original paper in Science uses three years of data to suggest that, although some previous altimeter studies have shown that parts of the ice sheet are thickening, there is an overall loss of 70-230 km³ of ice per year for the whole Antarctic ice sheet, equivalent to a global sea level rise of 0.2-0.6 mm/year.
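The step from an ice volume to a sea level figure is simple arithmetic. Here is a minimal Python sketch, assuming an ice density of 917 kg/m³, treating the meltwater as fresh water at 1000 kg/m³, and spreading it evenly over a world ocean area of roughly 3.61 × 10^14 m² (these constants are my assumptions, not values taken from the paper). With those numbers the 70-230 km³ range comes out at about 0.2-0.6 mm/year.

```python
# Convert an annual loss of grounded ice (km^3) into global-mean sea level rise (mm).
ICE_DENSITY = 917.0      # kg/m^3, assumed typical glacier ice
WATER_DENSITY = 1000.0   # kg/m^3, assumed fresh meltwater
OCEAN_AREA_M2 = 3.61e14  # m^2, approximate area of the world ocean


def sea_level_rise_mm(ice_loss_km3: float) -> float:
    """Sea level rise (mm) from melting ice_loss_km3 of grounded ice."""
    ice_m3 = ice_loss_km3 * 1e9                      # 1 km^3 = 1e9 m^3
    water_m3 = ice_m3 * ICE_DENSITY / WATER_DENSITY  # meltwater occupies ~8% less volume
    return water_m3 / OCEAN_AREA_M2 * 1000.0         # spread over the ocean; m -> mm


for loss in (70, 230):
    print(f"{loss} km^3/yr of ice -> {sea_level_rise_mm(loss):.1f} mm/yr")
```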

Stoat points out that many write-ups, such as the one in The Guardian, miss an important consideration when examining this result:

…note that the results depend very heavily on the adjustment for isostatic rebound. This is because Antarctica is smaller now than at the height of the last glacial; hence lighter; hence the rock underneath is slowly moving upwards (post-glacial-rebound; PGR). GRACE measures gravity anomalies; ie weight of rock and snow (errm, and actually also weight of nearby water masses, which could be a problem on so short a scale as 3 years). So if the rock is going up, that needs to be subtracted to get mass of ice. The "problem" is that this is not a small term; see the papers figure 2, which shows that the trend is flat, without the correction for the rebound term.

Not every media organisation missed this (the BBC didn’t, to their credit), but it occurs to me that this sort of thing is quite common. When talking about our work, we scientists should be a bit more careful about saying “measure” when we mean something more like “infer”. Particularly in historical sciences like geology, we are often examining the effects of events rather than the events themselves, and we are making assumptions about how the value of the thing we are measuring relates to the thing we want to measure. Sometimes (as in this case) the effects of other factors are not completely clear. Sometimes we come to realise that our assumptions were not quite right, which changes our interpretation.

Scientists understand all this, of course; in fact, we spend most of our time questioning and testing those assumptions, which is why they do sometimes change. I just worry that in our eagerness to make our results understandable to the wider public, we sometimes leave out too much of this process; and in contentious issues like climate change, where sceptics love to play up the whole, “so now they’re seeing this, when last week they said the complete opposite!” angle, our carelessness can come back to haunt us.
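The GRACE case shows the difference between measuring and inferring quite neatly. In this minimal Python sketch the ice trend is obtained by subtracting a rebound contribution, which comes from a model rather than from the satellites, from the total mass trend recorded in the gravity field. The numbers are hypothetical (in gigatonnes per year), chosen only to mimic the situation Stoat describes, where the uncorrected trend is roughly flat.

```python
def inferred_ice_trend(total_mass_trend: float, rebound_trend: float) -> float:
    """Ice mass trend = total trend seen in the gravity field minus rock uplift.

    Both arguments share the same units (here Gt/yr). The rebound term is not
    observed directly; it comes from a model of post-glacial rebound, so any
    error in that model passes straight into the inferred ice trend.
    """
    return total_mass_trend - rebound_trend


observed = 0.0  # hypothetical: a roughly flat uncorrected trend
for rebound in (100.0, 150.0, 200.0):  # hypothetical rebound-model estimates
    ice = inferred_ice_trend(observed, rebound)
    print(f"rebound model {rebound:.0f} Gt/yr -> inferred ice change {ice:+.0f} Gt/yr")
```

When the raw signal is flat, every gigatonne of uncertainty in the rebound model becomes a gigatonne of uncertainty in the ice estimate, which is why "infer" is the more honest verb here.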

Posted by Chris R at 9:33 am

0 comments

Labels: geology

01 March, 2006

Why it’s time to junk the National Grid

This post continues from where I left off in the last.

You could argue that I’m being premature by slagging off government energy policy when they’ve not announced it yet. But there is a clear intent to put nuclear back on the table, both in public statements and in the consultation document itself, which (if you like your statistics well-supported, look away now) mentions “nuclear” 108 times in 77 pages, in comparison to only 55 times in the much longer (142 pages) 2003 White Paper.

It won’t work.

Firstly (and forgive me for recycling old material here), electricity generation accounts for only 35% of our energy usage, so, as the consultation document itself points out, “… even if we had a completely carbon-free generation mix but took no measures in other sectors, we would fall far short of our 2050 target.”

And, again repeating myself, if you take into account the energy costs of refining and decommissioning, nuclear power is only carbon-free at the point of generation (incidentally, I can now point you directly to the work of Jan Willem Storm van Leeuwen and Philip Bartlett Smith here). Once we start refining low-grade uranium ore to meet the increased demand of new nuclear power stations, we may well emit more CO2 than we save at the generation end. Besides, replacing fossil fuels with uranium is merely replacing one non-renewable energy source with another (from a geological perspective, a less renewable one); current known uranium ore reserves will last no more than 50 years even at present levels of demand. As a (OK, the) commentator on my first post pointed out, this ignores the potential of reprocessing and breeder reactors, but these are technically difficult and expensive technologies, which is why they’ve never been taken up in a big way in the first place.

To be fair, the consultation document does actually acknowledge these facts (although it hides them in one of the appendices); the argument seems to be that nuclear is still the best option despite them. Renewables are not being ignored, and are projected to generate 15% of our electricity by 2002 (up from <5% now), but the problems of cost and of the intermittent supply they add to the National Grid seem to prevent them from taking up a larger role. So the government seems to be concluding that there is currently no sustainable solution other than using the nuclear option to buy time while we wait for some amorphous ‘new technology’ to appear and fix things overnight.

However, this gloomy outlook ignores a major potential avenue for cutting emissions. Our energy distribution system dates back many decades, to a time when coal was our major source of energy. It made sense to export electricity rather than fuel from the isolated coal mines, so the present system of generating electricity at big power stations and then distributing it around the country via the National Grid was developed. But this system has disadvantages: about two thirds of the energy in the fuel driving the turbines is lost as waste heat, both at the power station itself and through resistive heating in the power lines and transformers of the transmission system.

Ironically, heating accounts for the overwhelming majority of energy usage in the domestic and industrial sectors. This coincidence has led to the development of cogeneration – a combined heat and power (CHP) system where waste heat from power generation is used to heat water for supply to local buildings. You save twice, because you’re wasting less of the energy released by burning fossil fuels, and you don’t have to use additional energy to provide space heating.
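The "save twice" argument is easy to check with a back-of-the-envelope calculation. The minimal Python sketch below compares the fuel needed to supply the same electricity and heat separately and via cogeneration. All the efficiencies are hypothetical round numbers, not figures from the consultation document: 35% delivered efficiency for grid electricity (roughly in line with the two-thirds loss mentioned above), an 85% efficient boiler, and a CHP plant converting 35% of its fuel to electricity and 45% to useful heat.

```python
def separate_fuel(elec: float, heat: float,
                  grid_eff: float = 0.35, boiler_eff: float = 0.85) -> float:
    """Fuel needed when electricity comes from a distant power station
    (delivered efficiency grid_eff) and heat from a local boiler."""
    return elec / grid_eff + heat / boiler_eff


def chp_fuel(elec: float, heat: float,
             chp_elec_eff: float = 0.35, chp_heat_eff: float = 0.45) -> float:
    """Fuel needed when one local CHP plant supplies both demands. The plant is
    sized for whichever demand needs more fuel; any surplus of the other is dumped."""
    return max(elec / chp_elec_eff, heat / chp_heat_eff)


# Hypothetical demands, in arbitrary units of delivered energy.
elec_demand, heat_demand = 35.0, 45.0
separate = separate_fuel(elec_demand, heat_demand)
combined = chp_fuel(elec_demand, heat_demand)
print(f"separate: {separate:.1f}, CHP: {combined:.1f}, saving: {1 - combined / separate:.0%}")
```

Under these assumptions cogeneration needs roughly a third less fuel. The saving only materialises if there is a use for the heat close to the plant.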

The problem is that the benefits of cogeneration are limited when you have a small number of big, widely separated power stations, because it is only practical to heat buildings which are close by. What is needed is a more distributed generation network, with many smaller cogeneration plants supplying electricity and hot water to the local area. This is no theoretical pipe dream, either – a scheme in Woking has cut the emissions for council buildings by 77% in the last 15 years. I was surprised to find out that we even have a scheme here in Southampton.

Therefore, rather than replacing our large, isolated power stations with more of the same, why not build a distributed network of smaller cogeneration plants? We even have the gas network in place with which to fuel them! Such a strategy is explored in some detail by a Greenpeace report here (pdf, discovered via Camden Lady, who has posts on this subject here and here; see also the World Alliance for Decentralised Energy site). They suggest that natural gas can be used as a ‘bridging technology’ to develop a distributed infrastructure, which can gradually incorporate more renewable energy (intermittency is less of a problem for a local grid, where demand is also intermittent, and heat and battery storage technologies are more practical), and any new technologies, such as hydrogen power, as they develop.

Thinking globally, it is obviously no good for the UK to cut its own greenhouse gas emissions, which account for only 2% of global output, if the rest of the world does not follow. Leading the way with distributed generation technologies will show developing countries, many of which lack a national electricity network, a more environmentally friendly way to industrialise (not to mention being a nice little earner for us).

Even the consultation document admits that there is some promise in this area (although dodgy statistics show that distributed generation is down to 3 mentions from 27 in the 2003 White Paper):

Micro electricity generation technologies (including micro-CHP) could potentially provide up to ~30 to 40% of UK electricity demand by 2050 given the right market conditions.

But this has already been achieved in northern European countries such as the Netherlands and Denmark. Do we really need 45 years to catch up with them? The relative lack of enthusiasm (reflected by the fact that our current regulatory framework makes setting up local power generation very difficult) apparently stems from thinking that smaller-scale power generation is something which needs to be bolted on to the existing system, rather than replacing it. And the time is ripe for replacement:

Maintaining the reliability of electricity supplies will require very substantial levels of new investment as … ageing distribution networks [are] maintained and renewed

Billions of pounds are going to be needed in the next few years to upgrade the National Grid. Why not change it instead, whilst our energy destiny is still in our own hands? It’s probably not as simple as I’d like to think. Maybe the changeover to a distributed system would take a long time, maybe we’d still need some new nuclear power stations to help bridge the gap (I can’t see why but you never know). But I’d like to see it as the target. I’d like to see the government show some real vision.

Posted by Chris R at 11:08 pm

2 comments

Labels: environment